Difference between revisions of "Vulcan/TextualEvidenceFinder"

(→Solr/Lucence Layer) |

(→Query Generator) |

||

| (5 intermediate revisions by 2 users not shown) | |||

| Line 7: | Line 7: | ||

== Components == | == Components == | ||

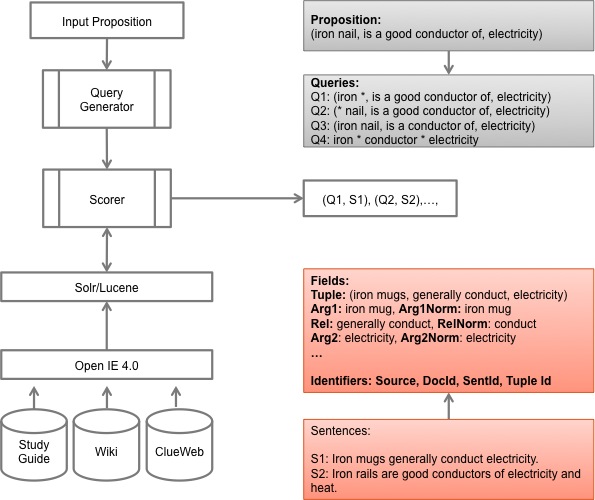

| − | [[File:weak-evidence-finder.jpg|frame|center|alt= | + | [[File:weak-evidence-finder.jpg|frame|center|alt=Textual Evidence Finder Details|System Architecture: Textual Evidence Finder]] |

=== Query Generator === | === Query Generator === | ||

| − | + | Queries are generated from the tuple given for the proposition, for example | |

| − | + | : <code>(iron nail, is a good conductor of, electricity)</code> | |

| − | |||

| − | |||

| − | </ | ||

| − | + | We'll do some preprocessing for stopword and function word removal, do something (TBD) about adjectives, and then execute different kinds of logical queries against indexed tuples that have been extracted from evidence source texts like the studyguide, glossary, clueweb(?), etc. The logical query types are | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | * Whole-tuple-match template queries match the full proposition tuple against indexed tuples (tuples extracted from source texts like the studyguide, glossary, clueweb, etc). Results from these queries would be considered the strongest evidence. For the given example: | |

| − | < | + | arg1:(iron AND nail) AND rel:(good AND conductor) AND arg2:(electricity) |

| + | * Partial-tuple-match template queries match some of the terms in the proposition tuple against the same fields of indexed tuples. For the given example: | ||

| + | arg1:(iron OR nail) AND rel:(conductor) AND arg2(electricity) | ||

| + | * Keyword-match queries match any keywords from the proposition tuple against any field(s) of the indexed tuples. For the given example, where the <code>text</code> field is the concatenation of fields <code>arg1, rel,</code> and <code>arg2</code>: | ||

| + | text:(iron OR nail OR conductor OR electricity) | ||

| − | + | We would also perform some (or all?) of the above queries with synonym expansion, and later (maybe) with hypernym expansion. | |

| − | + | There still are open questions around: | |

| − | + | * Where and how to use normalized versions of terms (once we have both lemmatized and literal values indexed for <code>arg1, rel,</code> and <code>arg2</code>. | |

| + | * How to handle adjectives. | ||

| + | * How to handle relation negation and polarity | ||

| + | * What to do about headwords. | ||

| + | * How exactly to score query results. Initially we'll score results by rank from the query classes above along with term-overlap scores. | ||

| − | + | === Solr/Lucence Layer === | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

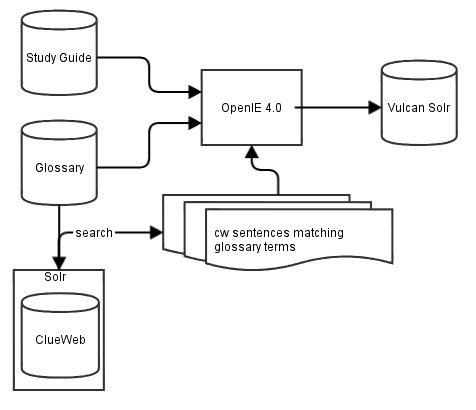

| − | + | A general outline of the process to build the solr index for Vulcan. | |

| − | + | [[File:Vulcan_extractions.jpg]] | |

To be filled in... | To be filled in... | ||

Latest revision as of 22:22, 3 September 2013

I/O

Input: A Proposition [A natural language sentence + Open IE tuples from the sentence.]

Output: A list of query/score pairs representing evidence for the proposition.
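The input and output described above could be modeled roughly as follows. This is only a sketch: the class names `OpenIETuple` and `Proposition`, the `Evidence` alias, and the example sentence are all assumptions made for illustration and are not part of the design.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class OpenIETuple:
    """One (arg1, rel, arg2) extraction. Hypothetical name."""
    arg1: str
    rel: str
    arg2: str


@dataclass(frozen=True)
class Proposition:
    """A natural language sentence plus the Open IE tuples extracted from it."""
    sentence: str
    tuples: List[OpenIETuple]


# Output: a list of (query string, score) pairs representing evidence.
Evidence = List[Tuple[str, float]]

# Illustrative input only; the sentence wording is assumed.
example = Proposition(
    sentence="An iron nail is a good conductor of electricity.",
    tuples=[OpenIETuple("iron nail", "is a good conductor of", "electricity")],
)
```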

Components

Query Generator

Queries are generated from the tuple given for the proposition, for example:

(iron nail, is a good conductor of, electricity)

We'll first preprocess the tuple by removing stopwords and function words and by handling adjectives in some way (TBD). We'll then execute different kinds of logical queries against indexed tuples that have been extracted from evidence source texts such as the study guide, glossary, ClueWeb(?), etc. The logical query types are:

- Whole-tuple-match template queries match the full proposition tuple against the indexed tuples. Results from these queries would be considered the strongest evidence. For the given example:

arg1:(iron AND nail) AND rel:(good AND conductor) AND arg2:(electricity)

- Partial-tuple-match template queries match some of the terms in the proposition tuple against the same fields of indexed tuples. For the given example:

arg1:(iron OR nail) AND rel:(conductor) AND arg2:(electricity)

- Keyword-match queries match any keywords from the proposition tuple against any field(s) of the indexed tuples. For the given example, where the text field is the concatenation of fields arg1, rel, and arg2:

text:(iron OR nail OR conductor OR electricity)
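The three query types above can be sketched as simple string templates. This is a minimal sketch, not the actual generator: the `STOPWORDS` and `ADJECTIVES` sets are hypothetical stand-ins (adjective handling is still TBD), chosen only so that the output reproduces the example queries, and all function names are assumptions.

```python
# Hypothetical word lists; the real stopword/function-word lists are not specified.
STOPWORDS = {"is", "a", "an", "the", "of"}
# Adjective handling is TBD in the design; this set only reproduces the example.
ADJECTIVES = {"good"}

FIELDS = ("arg1", "rel", "arg2")


def content_terms(phrase, drop_adjectives=False):
    """Lowercase, split on whitespace, and drop stopwords (optionally adjectives)."""
    terms = [t for t in phrase.lower().split() if t not in STOPWORDS]
    if drop_adjectives:
        terms = [t for t in terms if t not in ADJECTIVES]
    return terms


def field_clause(field, terms, op):
    """Build one fielded clause such as arg1:(iron AND nail)."""
    joiner = f" {op} "
    return f"{field}:({joiner.join(terms)})"


def whole_tuple_query(tup):
    """Every content term of every field must match the same field."""
    clauses = [field_clause(f, content_terms(p), "AND") for f, p in zip(FIELDS, tup)]
    return " AND ".join(clauses)


def partial_tuple_query(tup):
    """Any remaining term of each field may match the same field."""
    clauses = [
        field_clause(f, content_terms(p, drop_adjectives=True), "OR")
        for f, p in zip(FIELDS, tup)
    ]
    return " AND ".join(clauses)


def keyword_query(tup):
    """Any keyword may match the concatenated text field."""
    terms = [t for p in tup for t in content_terms(p, drop_adjectives=True)]
    return field_clause("text", terms, "OR")


example = ("iron nail", "is a good conductor of", "electricity")
print(whole_tuple_query(example))
print(partial_tuple_query(example))
print(keyword_query(example))
```

A field whose terms are all removed by preprocessing would produce an empty clause here; a real generator would need to skip such fields.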

We would also perform some (or all?) of the above queries with synonym expansion, and later (maybe) with hypernym expansion.
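One possible shape for synonym expansion is to replace each term with an OR group of the term and its synonyms. The sketch below assumes a plain dictionary as the synonym resource and single-word synonyms; the synonym entry shown is invented for illustration, and the function names are hypothetical.

```python
def expand_term(term, synonyms):
    """Return the term itself, or an OR group of the term and its synonyms."""
    alternatives = [term] + [s for s in synonyms.get(term, []) if s != term]
    if len(alternatives) == 1:
        return term
    return "(" + " OR ".join(alternatives) + ")"


def expanded_clause(field, terms, synonyms, op="AND"):
    """Build a fielded clause in which every term is synonym-expanded."""
    joiner = f" {op} "
    expanded = [expand_term(t, synonyms) for t in terms]
    return f"{field}:({joiner.join(expanded)})"


# Hypothetical synonym table; the real resource is not specified.
SYNONYMS = {"conductor": ["transmitter"]}

print(expanded_clause("rel", ["good", "conductor"], SYNONYMS))
```

Hypernym expansion could reuse the same shape with a different lookup table, though multi-word entries would need to be quoted as phrases.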

There are still open questions around:

- Where and how to use normalized versions of terms (once we have both lemmatized and literal values indexed for arg1, rel, and arg2).

- How to handle adjectives.

- How to handle relation negation and polarity.

- What to do about headwords.

- How exactly to score query results. Initially we'll score results by rank from the query classes above along with term-overlap scores.
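The initial scoring idea in the last item could look something like the sketch below. Exactly how scoring should work is still open, so everything here is an assumption: the class weights, the choice of overlap measure (fraction of proposition terms found in the matched tuple), and the additive combination.

```python
# Hypothetical weights: a stronger query class gets a higher base score.
CLASS_WEIGHT = {"whole": 3.0, "partial": 2.0, "keyword": 1.0}


def term_overlap(proposition_terms, matched_terms):
    """Fraction of the proposition's terms that also occur in the matched tuple."""
    wanted = set(proposition_terms)
    if not wanted:
        return 0.0
    return len(wanted & set(matched_terms)) / len(wanted)


def score(query_class, proposition_terms, matched_terms):
    """Combine the query-class weight with the term-overlap score (assumed additive)."""
    return CLASS_WEIGHT[query_class] + term_overlap(proposition_terms, matched_terms)


prop = ["iron", "nail", "conductor", "electricity"]
hit = ["iron", "conductor", "electricity", "metal"]
print(score("partial", prop, hit))
```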

Solr/Lucene Layer

This section gives a general outline of the process to build the Solr index for Vulcan.

To be filled in...

- Index

  - What corpora will be indexed? [Study guide, definitions and sentences covering glossary terms]

  - What is the index structure? [Each tuple will be a Lucene document?]

  - What is in a document? [Arg1, Arg1 Norm, Rel, Rel Norm, ...]

  - ...

- Search

  - How to find tuples that match template queries? Use bag-of-words search and then filter for tuples that match the template?

  - How can we use synonyms or other paraphrase resources?
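To make the questions above concrete, here is an in-memory sketch of one tuple per document and of the "bag-of-words search, then filter" idea. It does not call Solr or Lucene. The field names (`arg1_norm` and so on), the use of lowercasing as a stand-in for lemmatization, and the sample tuples are all assumptions made for illustration.

```python
def make_document(arg1, rel, arg2, normalize=str.lower):
    """One indexed tuple as a flat document; `normalize` stands in for lemmatization."""
    return {
        "arg1": arg1, "arg1_norm": normalize(arg1),
        "rel": rel, "rel_norm": normalize(rel),
        "arg2": arg2, "arg2_norm": normalize(arg2),
        # Concatenation of the three fields, used for keyword search.
        "text": " ".join((arg1, rel, arg2)),
    }


def bag_of_words_search(docs, keywords):
    """Return documents whose text field shares at least one keyword."""
    wanted = {k.lower() for k in keywords}
    return [d for d in docs if wanted & set(d["text"].lower().split())]


def matches_template(doc, template):
    """True if every required term appears in the corresponding field."""
    return all(set(terms) <= set(doc[field].split()) for field, terms in template.items())


# Illustrative tuples only; not taken from any real corpus.
docs = [
    make_document("Iron nail", "conducts", "electricity"),
    make_document("Iron", "is", "a metal"),
    make_document("Wood", "is", "an insulator"),
]

candidates = bag_of_words_search(docs, ["iron", "electricity"])
template = {"arg1_norm": ["iron", "nail"], "arg2_norm": ["electricity"]}
matches = [d for d in candidates if matches_template(d, template)]
print(len(candidates), len(matches))
```

The broad search keeps recall high, and the per-field filter then recovers the precision of a template query; whether this is preferable to issuing fielded queries directly is one of the open questions.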

Open IE 4.0

Use Open IE 4.0.