Multir

This is a page about Multir.

Contents

- 1 Timetable

- 2 Documents

- 3 March 31 2014 Update

- 4 March 26 2014 Update

- 5 March 21 2014 Update

- 6 March 20 2014 Update

- 7 March 11 2014 Update

- 8 March 7 2014 Update

- 9 February 27 2014 Update

- 10 February 13 2014 Update

- 11 February 5 2014 Update

- 12 January 16 2014 Update

- 13 January 8 2014 Update

- 14 January 6 2014 Update

- 15 December 18 2013 Update

- 16 December 12 2013 Update

- 17 December 11 2013 Update

- 18 December 9 2013 Update

- 19 December 6 2013 Update

- 20 December 4 2013 Update

- 21 November 27 2013 Update

- 22 November 26 2013 Update

- 23 November 12 2013 Update

- 24 November 8 2013 Update

- 25 Multir Instructions

- 26 Multir Data

- 27 Goals

- 28 Weekly Logs

- 29 Specifications

- 30 System Architecture

- 31 General Relation Extraction Architecture (from Mitchell Koch)

- 32 Revised System Architecture

Timetable

Documents

March 31 2014 Update

Stephen's Update

- Analysis of why partitioning is helping is at /projects/WebWare6/Multir/Evaluations/Analysis-Partition_vs.NoPartition.pdf

- The overgeneralized features that get high weight without partitioning have low or even negative weight with partitioning.

- Possibly this is because partitioning supplies negative training examples that are more relevant to each relation.

- Summary of what we have learned so far and plans for next week's experiments at /projects/WebWare6/Multir/Evaluations/Plans_for_Experiments.pdf

March 26 2014 Update

John's Update

- This Week

- Formatted 2013 UIUC Wikifier Data

- Ran Partition Experiments; initial results are here [1]

- Relevant Evaluation Files are located at /projects/WebWare6/Multir/Evaluations/NERPartitionEvaluations

- Next Week

- Create a more in-depth analysis of the results of partitioning

- Continue Development on a Wrapper for the 2013 UIUC Wikifier

- Modify Multir Learning Module to make use of specific negative labels like N-/people/person/nationality

- Combine Partitioning with Generalized Features

March 21 2014 Update

- Bob's update this week [2]

March 20 2014 Update

- John's update from this week with results of new method for negative training [3]

March 11 2014 Update

- Summary of Multir Experiments so far [4]

March 7 2014 Update

- Running 2013 UIUC Wikifier with Relational Inference on Hadoop; the approximate finish date is March 16.

- Modified Multir Pipeline by implementing type signature partitioning for different models and avoiding false negatives [5]

- At test time, each pair of candidate arguments is evaluated by the model that matches the pair's type signature. This should reduce the noise from negative examples.

- The new negative example approach helps to reduce the number of false negatives
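The test-time routing described above can be sketched as follows. This is a minimal illustration, not the project's implementation: the class name `PartitionedExtractor`, the `"NA"` no-relation label, the coarse type names, and the toy models are all assumptions made for the example.

```python
from typing import Callable, Dict, List, Tuple

# A type signature is the pair of coarse types of the two arguments (assumed form).
TypeSignature = Tuple[str, str]
# Hypothetical model interface: takes a feature list, returns a relation label.
Model = Callable[[List[str]], str]


class PartitionedExtractor:
    """Routes each candidate argument pair to the model for its type signature."""

    def __init__(self, models: Dict[TypeSignature, Model], fallback: Model):
        self.models = models
        self.fallback = fallback

    def predict(self, arg1_type: str, arg2_type: str, features: List[str]) -> str:
        # Pick the model trained on this partition, or the fallback if none exists.
        model = self.models.get((arg1_type, arg2_type), self.fallback)
        return model(features)


# Toy stand-ins for trained models (illustrative only).
def person_location_model(features: List[str]) -> str:
    return "/people/person/nationality" if "citizen_of" in features else "NA"


def no_relation_model(features: List[str]) -> str:
    return "NA"


extractor = PartitionedExtractor(
    {("PERSON", "LOCATION"): person_location_model},
    fallback=no_relation_model,
)
print(extractor.predict("PERSON", "LOCATION", ["citizen_of"]))
print(extractor.predict("ORGANIZATION", "PERSON", ["citizen_of"]))
```

Because each model only sees pairs with its own signature, a pair such as (ORGANIZATION, PERSON) can never be scored by the person/location model in this sketch.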

February 27 2014 Update

- Analysis of implementing FIGER types into Multir Pipeline [6]

February 13 2014 Update

- After noticing the difference in argument type distributions between positive and negative examples, as shown in the report from February 5, we decided to try out some different negative example training algorithms [7]. The results do not show one dominant algorithm, but they indicate that a large base of noisy negative training data helps eliminate many false positives. Adding a set of near-miss negative examples to this base would likely boost precision.

February 5 2014 Update

- Analysis of Multir Features [8]

January 16 2014 Update

- Changed the Evaluation Scheme and compared NEL vs NER Multir approaches [9]

January 8 2014 Update

- Stephen's Analysis of a sample of Multir Training Data showed that the majority of the training data does not express the relation it is annotated with.

- His annotations are here [10]

- Implemented Named Entity Linking based Argument Identification and Relation Matching and trained a new Model with this approach; the results are here [11]

January 6 2014 Update

- Working On Running the UIUC 2013 Wikifier over the corpus with Hadoop

- Adding Support to the Multir Framework for incorporating Named Entity Linking data and Coreference data

December 18 2013 Update

- Original Multir Distant Supervision Error Analysis [12]

December 12 2013 Update

- Reimplemented Multir Evaluation Results ignoring /location* relations [13]

December 11 2013 Update

- Emulated Original Multir Sentential Extraction Performance Results [14]

December 9 2013 Update

- Validated train/test feature generation

- Ran Sentential Extractor on DEFT benchmark. Results were precision = 28% and recall = 5%.

- December 9 2013 Multir Report [15]

- Will Run on original Multir Sentential Annotations shortly.

December 6 2013 Update

- Created Corpus Information Files With Newer Models at /projects/WebWare6/Multir/MultirSystem/files/09NWNewModelsCorpus/

- Files include sentences.cj.12-13, sentences.ner, sentences.pos, and sentences.deps

December 4 2013 Update

- Cleaned Charniak-Johnson parser output; ~60 sentences out of 27M were not parsed. The file is at /projects/WebWare6/Multir/MultirSystem/files/sentences.cj.12-13

November 27 2013 Update

- Ran a newer version of the Charniak-Johnson parser on the Hadoop cluster; the output is at /projects/WebWare6/Multir/MultirSystem/files/sentences.cj.11-13.

- Currently writing more Hadoop jobs for pre-parse processing and post-parse processing.

November 26 2013 Update

Please see the updated development schedule to see what progress has been made. I've recently been slowed down by writing a Hadoop application for the parser. I hope to have all of the corpus processing running before the end of the week so that next week I can evaluate the performance of the sentential extractor on a set of 100 sentences I annotated with Freebase relations as a DEFT deliverable.

The complications of generating information on a large corpus have convinced me that creating an API for this process would be difficult and might complicate matters further. Stephen suggested that I instead write detailed documentation and a specification for anyone who wishes to generate the same types of data from a different corpus.

- Progress

- Developed a Document Extractor that uses the same training time algorithms for argument identification, sentential instance generation, and feature generation at test time and returns a set of relations.

- Developed Single-Machine Preprocessing Corpus Code Divided into 3 batch processes:

- 1. Pre-Parse Processing (NER, Lemmatization, POS) computing time is ~1 day on a single machine

- 2. Parsing (CharniakJohnson parser 6 sentences/sec) ~6 days on a single machine

- 3. Post-Parse Processing (Dependency parse generation) ~8 hours on a single machine

- In Progress

- Writing Hadoop application for running the CharniakJohnson parser

- Issues

- There is a limit to the amount of training data that the current Multir implementation can handle. We recently built a training set with 17M unique features and 45 unique relations. This proved too large for the training algorithm implemented by Multir, which ran out of memory with 30GB on the heap. Exploring alternative training options or optimizations for larger data sets will be useful.
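As a rough back-of-the-envelope check on the scale involved, the sketch below estimates the size of a dense weight table for the feature and relation counts quoted above. It assumes one 8-byte weight per (feature, relation) pair; the source does not say how Multir actually stores its parameters, so the function name and the dense layout are purely illustrative.

```python
def dense_weight_bytes(num_features: int, num_relations: int,
                       bytes_per_weight: int = 8) -> int:
    """Bytes needed for one weight per (feature, relation) pair, stored densely."""
    return num_features * num_relations * bytes_per_weight


# Counts taken from the issue above: 17M unique features, 45 unique relations.
size = dense_weight_bytes(17_000_000, 45)
print(f"{size / 2**30:.1f} GiB for a dense table of 8-byte weights")
```

Under this assumption the weights alone would be well below the 30GB heap, which hints that the training instances and feature lookup structures, rather than the parameters by themselves, may account for much of the memory use.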

November 12 2013 Update

- Ran Aggregate Test with training/test data generated by new interface, results here

November 8 2013 Update

- I have implemented a Corpus abstraction along the lines of Mitchell's suggestion: it is an Iterator over the Documents in the corpus, where a Document is Stanford's Annotation object.

- This iterator is used to do distant supervision. A pluggable Argument Identification module takes as input an Annotation sentence and an Annotation document. The Argument Identification algorithm has access to all of the information in these data structures (NER, dependency parses, etc.).

- A similar Negative Examples module generates negative examples for entity pairs using the baseline negative example finding algorithm that Sebastian Riedel and his co-authors used in their paper.

- The feature generation module produces verbose output. Like Argument Identification, it is a pluggable module; it takes the offsets of two arguments, their kbids, and their Annotation sentence and Annotation document.

- I am now ready to train, test, and validate a Multir model that uses the training and test data that I've generated.

Once this is done, I can begin porting the preprocessing code to run in memory. That will support building a sentential extractor app for the web app and improving our DEFT implementation of the Multir algorithm.
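The pluggable design described in this update can be sketched roughly as below. The real code works with Stanford's Annotation objects in Java; here plain Python dataclasses stand in for them, and every name (`Sentence`, `Document`, `Argument`, `distant_supervision`, the `"NA"` label, the toy modules and toy knowledge base) is an assumption made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, Iterator, List, Optional, Tuple


@dataclass
class Sentence:                 # stand-in for an Annotation sentence
    tokens: List[str]
    ner: List[str]              # one NER tag per token


@dataclass
class Document:                 # stand-in for an Annotation document
    sentences: List[Sentence]


@dataclass
class Argument:
    start: int                  # token offset, inclusive
    end: int                    # token offset, exclusive
    kbid: Optional[str]         # knowledge-base id, if linked


# Hypothetical signatures for the two pluggable modules.
ArgumentIdentifier = Callable[[Sentence, Document], List[Tuple[Argument, Argument]]]
FeatureGenerator = Callable[[Argument, Argument, Sentence, Document], List[str]]


def distant_supervision(
    corpus: Iterable[Document],
    identify: ArgumentIdentifier,
    featurize: FeatureGenerator,
    kb: Dict[Tuple[str, str], str],
) -> Iterator[Tuple[str, str, str, List[str]]]:
    """Yield (kbid1, kbid2, relation, features) for every linked argument pair."""
    for doc in corpus:
        for sent in doc.sentences:
            for a1, a2 in identify(sent, doc):
                if a1.kbid is None or a2.kbid is None:
                    continue
                # Pairs missing from the KB get the assumed no-relation label.
                relation = kb.get((a1.kbid, a2.kbid), "NA")
                yield a1.kbid, a2.kbid, relation, featurize(a1, a2, sent, doc)


# Toy modules and data, for illustration only.
TOY_LINKS = {"Alice": "E1", "Canada": "E2"}
TOY_KB = {("E1", "E2"): "/people/person/nationality"}


def toy_identify(sent: Sentence, doc: Document) -> List[Tuple[Argument, Argument]]:
    # Treat every single token with a non-"O" NER tag as an argument.
    args = [Argument(i, i + 1, TOY_LINKS.get(tok))
            for i, (tok, tag) in enumerate(zip(sent.tokens, sent.ner)) if tag != "O"]
    return [(a, b) for a in args for b in args if a.start < b.start]


def toy_featurize(a1: Argument, a2: Argument, sent: Sentence, doc: Document) -> List[str]:
    # Single feature: the words between the two arguments.
    return ["between:" + "_".join(sent.tokens[a1.end:a2.start])]


doc = Document([Sentence(["Alice", "is", "a", "citizen", "of", "Canada"],
                         ["PERSON", "O", "O", "O", "O", "LOCATION"])])
rows = list(distant_supervision([doc], toy_identify, toy_featurize, TOY_KB))
print(rows)
```

Passing the modules in as plain callables keeps argument identification and feature generation swappable without touching the corpus loop, which is the property the update describes.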

Multir Instructions

- Instructions page at [16]

Multir Data

- /projects/WebWare6/Multir/MultirSystem/files/derbyCorpus/FullCorpus : Full 2009 News Wire Corpus with Named Entity Links from 2013 UIUC Wikifier

- /projects/WebWare6/Multir/MultirSystem/files/derbyCorpus/FullCorpus-WikipediaMiner : Named Entity Links are from Wikipedia Miner

Goals

1. Modularize and unify distant supervision and multir code bases

- Simplify instance representation

- Separate distant supervision and feature generation code

2. Add diagnostics for inspection of training/test instances and feature generation

Milestones/Goals

- Generate Distant Supervision data from preprocessed corpus. (10/29)

- Run Preprocessing code on new textual data (1/1)

- Provide Interface for NEL with external KB in Argument Identification (11/6)

- Run Original Multir Algorithm with new implementation (11/8)

- Run Multir with NEL-Argument Identification

Weekly Logs

- Notes for the week of October 7 2013

- Notes for the week of October 14 2013

- Notes for the week of October 21 2013

Specifications

- Input

- 1. Training Corpus

- 2. Semantic DB

- 3. Target Relation Set

- 4. Parameters for Distant Supervision and Training

- Instance Representation

| MentionNumber | Entity1 | Entity2 | Sentence | Feature 1 | Feature ... | Feature N |
|---|---|---|---|---|---|---|
| 0 | E1 | E2 | This is a sentence | feature1 | feature.. | featureN |
| 1 | E1 | E2 | This is a sentence | feature1 | feature.. | featureN |
| ... | E1 | E2 | This is a sentence | feature1 | feature.. | featureN |
| k | E1 | E2 | This is a sentence | feature1 | feature.. | featureN |
| 0 | E1 | E3 | This is a sentence | feature1 | feature.. | featureN |
| 0 | E1 | E... | This is a sentence | feature1 | feature.. | featureN |
| 0 | E1 | Ek | This is a sentence | feature1 | feature.. | featureN |
| 0 | E... | Ek | This is a sentence | feature1 | feature.. | featureN |
| 0 | Ek | Ek | This is a sentence | feature1 | feature.. | featureN |
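One way to hold the instance representation in the table above in code is sketched here. The field names follow the table columns; the tab-separated serialization, the `add_mention` helper, and the rule that mention numbers restart at 0 for each entity pair are assumptions read off the table rather than a documented format.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Instance:
    mention_number: int
    entity1: str
    entity2: str
    sentence: str
    features: List[str]

    def to_row(self) -> str:
        """Serialize as one tab-separated line, with the features last."""
        return "\t".join([str(self.mention_number), self.entity1, self.entity2,
                          self.sentence, *self.features])


# Mentions grouped by entity pair.
Table = Dict[Tuple[str, str], List[Instance]]


def add_mention(table: Table, entity1: str, entity2: str,
                sentence: str, features: List[str]) -> Instance:
    """Append a mention, numbering it within its entity pair starting at 0."""
    mentions = table[(entity1, entity2)]
    inst = Instance(len(mentions), entity1, entity2, sentence, features)
    mentions.append(inst)
    return inst


table: Table = defaultdict(list)
add_mention(table, "E1", "E2", "This is a sentence", ["feature1", "featureN"])
add_mention(table, "E1", "E2", "This is a sentence", ["feature1", "featureN"])
add_mention(table, "E1", "E3", "This is a sentence", ["feature1", "featureN"])

for mentions in table.values():
    for inst in mentions:
        print(inst.to_row())
```

Grouping by entity pair mirrors the table, where all mentions of (E1, E2) are listed together before the next pair starts again at mention 0.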

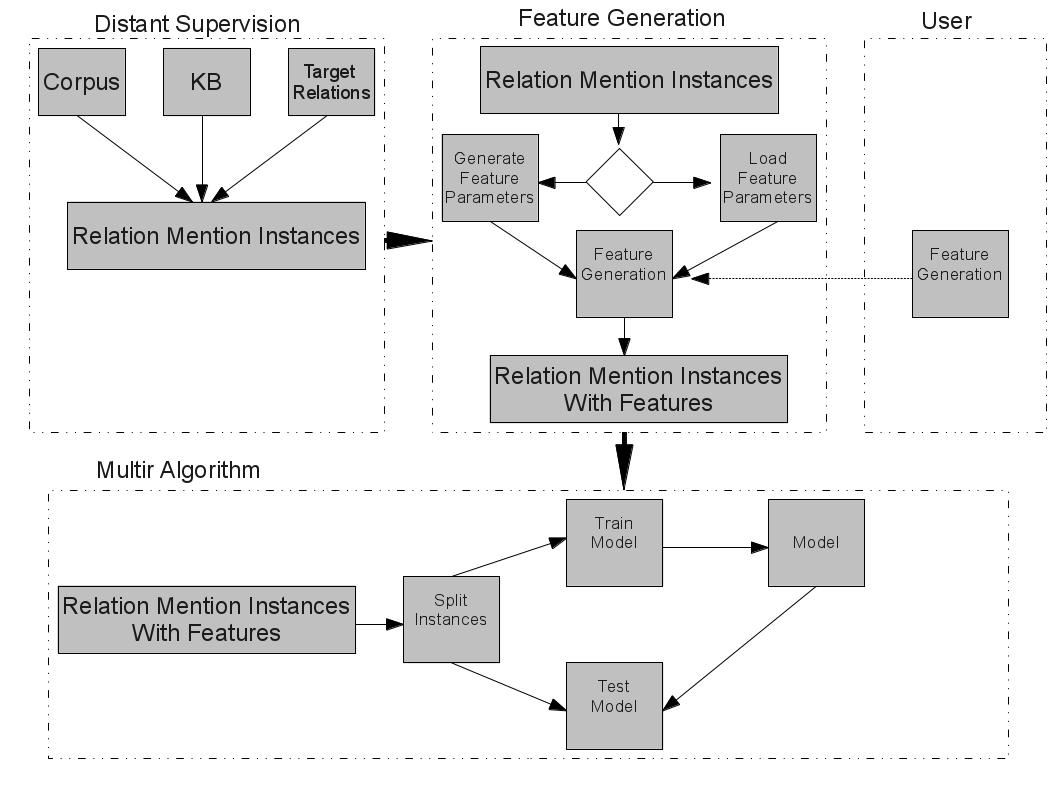

System Architecture

Multir System Architecture Draft 1

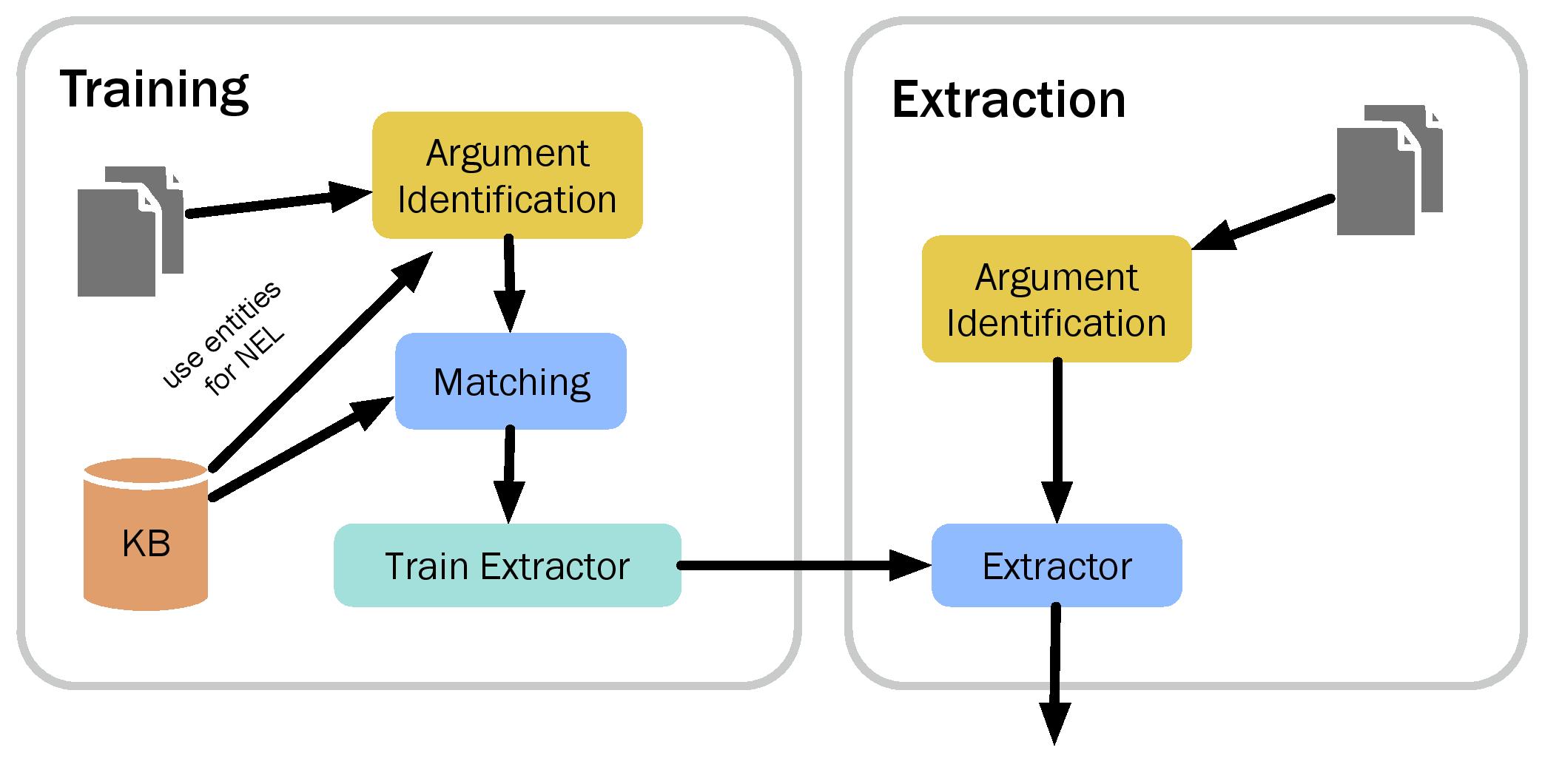

General Relation Extraction Architecture (from Mitchell Koch)

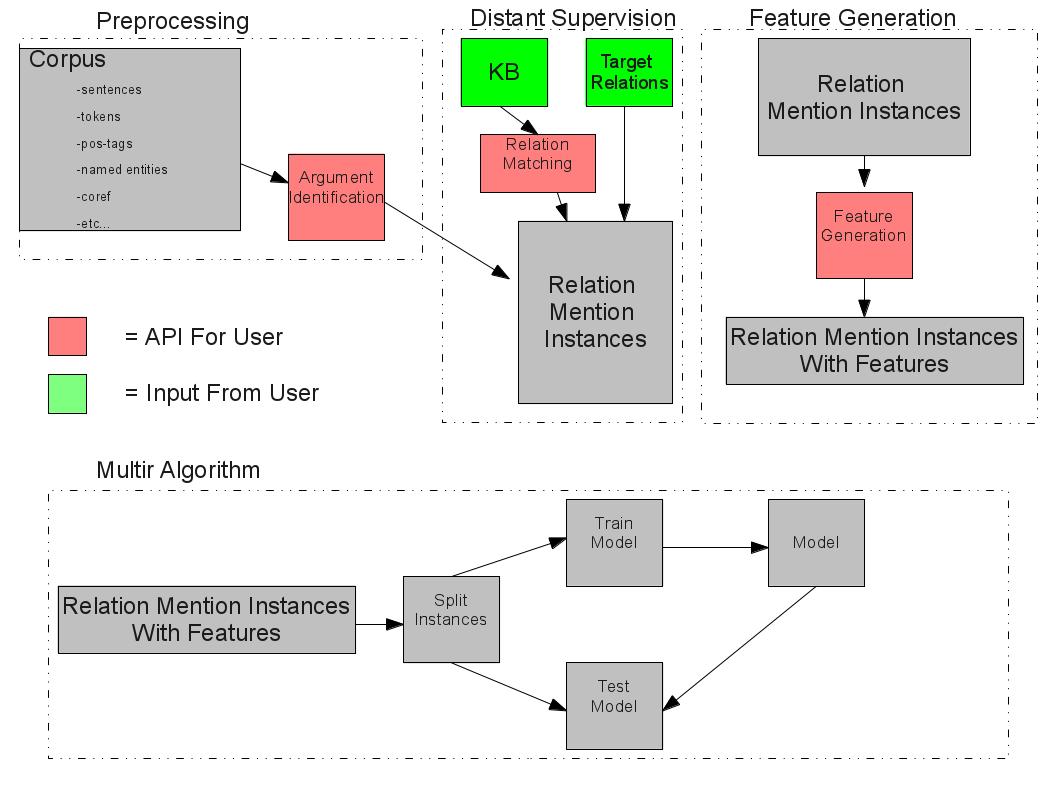

Revised System Architecture

Multir System Architecture Draft 2